In the digital age, there is a growing need for advanced technologies, not only for collecting data but, above all, for analysing it. Companies are accumulating ever larger amounts of varied information that can improve their efficiency and drive innovation. Data Engineering, offered by BFirst.Tech, can play a key role in putting that data to work for a company. It is an area of sustainable products for effective information management and processing. This article presents one of the opportunities offered by the Data Engineering area: the integration of Machine Learning with Data Lakes.

Data Engineering – an area of sustainable products dedicated to collecting, analysing and aggregating data

Data engineering is the process of designing and implementing systems for the effective collection, storage and processing of large data sets. Such systems support gathering information such as website traffic, readings from IoT sensors or consumer purchasing trends. The task of data engineering is to ensure that information is not only collected skilfully, but also stored so that it is easily accessible and ready for analysis. Data can be stored effectively in Data Lakes, data storage systems and Data Warehouses. These integrated data sources can then be used to create analyses or to feed artificial intelligence engines, ensuring comprehensive use of the collected information (see the detailed description of the Data Engineering area in img 1).
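To make the collect, store and access steps more concrete, here is a minimal extract-transform-load sketch in Python. It is an illustration under stated assumptions, not BFirst.Tech's implementation: the CSV export, the `page_views` table and the function names are hypothetical, and an in-memory SQLite database stands in for a real data store or warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a web analytics tool: one row per page and day.
RAW_CSV = """page,visits,date
/home,120,2024-01-01
/pricing,45,2024-01-01
/home,not_a_number,2024-01-02
/home,98,2024-01-02
"""


def extract(text: str) -> list[dict[str, str]]:
    """Collect: parse the raw export into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[str, int, str]]:
    """Prepare: convert types and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            clean.append((row["page"], int(row["visits"]), row["date"]))
        except (KeyError, ValueError):
            continue  # a real pipeline would log or quarantine the bad row
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple[str, int, str]]) -> None:
    """Store: write validated rows into a queryable table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_views (page TEXT, visits INTEGER, date TEXT)"
    )
    conn.executemany("INSERT INTO page_views VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))

# Access: the data is now ready for analysis with plain SQL.
totals = conn.execute(
    "SELECT page, SUM(visits) FROM page_views GROUP BY page ORDER BY page"
).fetchall()
print(totals)  # [('/home', 218), ('/pricing', 45)]
```

The malformed row is dropped during the transform step, so only validated data reaches the table that analysts query.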

img 1 – Data Engineering

Data lakes used for storing sets of information

Data Lakes make it possible to store huge amounts of raw data in their original, unprocessed format. Thanks to Data Engineering techniques, they can accept and integrate data from a wide variety of sources, for instance text documents, images and IoT sensor data, so that complex sets of information can be analysed and used in one place. This flexibility and ability to integrate diverse types of data make Data Lakes extremely valuable to organisations that must manage and analyse dynamically changing data sets. Unlike Data Warehouses, which typically require data to fit a predefined schema before it is loaded, Data Lakes handle a greater variety of data types, made possible by the advanced processing and management techniques used in Data Engineering. However, this versatility also makes such complex data sets harder to store and manage, which requires data engineers to keep adapting and implementing innovative approaches. [1, 2]
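The idea of keeping data in its original format can be sketched as a "raw zone" on a local file system. This is a minimal illustration, assuming a temporary directory stands in for object storage and that files are grouped by hypothetical `source=` and `date=` folders (a Hive-style partitioning convention often used in data lakes); nothing is parsed or validated on the way in.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_raw(lake_root: Path, source: str, day: date, name: str, payload: bytes) -> Path:
    """Write a payload byte-for-byte into a source/date partition of the raw zone."""
    target = lake_root / "raw" / f"source={source}" / f"date={day.isoformat()}" / name
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(payload)  # no parsing, no schema: the original format is kept
    return target


def list_source(lake_root: Path, source: str) -> list[str]:
    """List the files that have landed for one source, e.g. for a later processing job."""
    base = lake_root / "raw" / f"source={source}"
    return sorted(p.relative_to(base).as_posix() for p in base.rglob("*") if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    lake = Path(tmp)
    day = date(2024, 1, 1)
    # Heterogeneous inputs from different (hypothetical) sources, stored as they arrive.
    land_raw(lake, "iot", day, "sensor-17.json", json.dumps({"temp_c": 21.4}).encode())
    land_raw(lake, "docs", day, "contract.txt", b"Plain text document")
    land_raw(lake, "images", day, "scan.png", b"\x89PNG\r\n\x1a\n")  # PNG signature bytes only
    stored = land_raw(lake, "iot", day, "sensor-18.json", b'{"temp_c": 19.8}')
    unchanged = stored.read_bytes() == b'{"temp_c": 19.8}'
    iot_files = list_source(lake, "iot")

print(iot_files)  # ['date=2024-01-01/sensor-17.json', 'date=2024-01-01/sensor-18.json']
print(unchanged)  # True
```

Because no schema is imposed when data lands, structure is applied later, when a processing job reads the files (often called schema-on-read).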

Information processing in data lakes and the application of machine learning

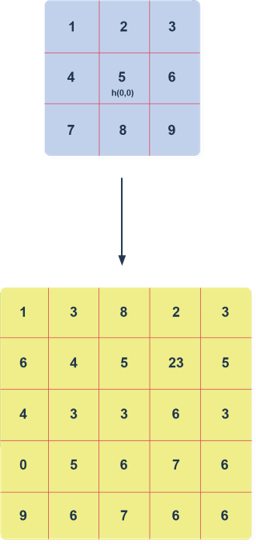

The growing volume and diversity of stored data make effective processing and analysis challenging. Traditional methods often cannot keep up with this growing complexity, which leads to delays and limits access to key information. Machine Learning, supported by innovations in Data Engineering, can significantly improve these processes. Trained on extensive data sets, Machine Learning algorithms identify patterns, predict outcomes and automate decisions. Integrated with Data Lakes (img 2), they can work with a variety of data types, from structured to unstructured, which enables more complex analyses. This breadth allows a more thorough understanding and use of data that would be inaccessible in traditional systems.
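Working with both structured and unstructured data usually means projecting raw records onto a common set of features before a model can use them. The sketch below assumes hypothetical JSON purchase events and free-text customer notes; the field names, the `NEGATIVE_WORDS` list and the crude word-count feature are illustrative stand-ins for real feature engineering, not a recommended sentiment model.

```python
import json
import re

# Hypothetical raw records as they might sit in a lake: JSON events with
# varying fields, plus a free-text customer note.
RAW_RECORDS = [
    ("json", '{"customer": "a", "amount": 120.0, "channel": "web"}'),
    ("json", '{"customer": "b", "amount": 35.5}'),  # no "channel" field
    ("text", "customer=a: delivery was late, very unhappy"),
]

NEGATIVE_WORDS = {"late", "unhappy", "broken"}


def to_features(kind: str, payload: str) -> dict[str, object]:
    """Apply a schema at read time: every record becomes the same flat feature dict."""
    if kind == "json":
        event = json.loads(payload)
        return {
            "customer": event["customer"],
            "amount": float(event.get("amount", 0.0)),
            "is_web": 1 if event.get("channel") == "web" else 0,
            "negative_words": 0,
        }
    # Unstructured text: pull out the customer id and count negative words.
    match = re.match(r"customer=(\w+):\s*(.*)", payload)
    customer, note = (match.group(1), match.group(2)) if match else ("unknown", payload)
    words = re.findall(r"[a-z]+", note.lower())
    return {
        "customer": customer,
        "amount": 0.0,
        "is_web": 0,
        "negative_words": sum(word in NEGATIVE_WORDS for word in words),
    }


rows = [to_features(kind, payload) for kind, payload in RAW_RECORDS]
for row in rows:
    print(row)
```

Once every record shares the same keys, the rows can be passed to any model that expects a fixed feature layout.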

Applying Machine Learning to Data Lakes enables deeper analysis and more efficient processing, supported by advanced Data Engineering tools and strategies. This allows organisations to turn large amounts of raw data into useful, valuable information, which is important for improving their operational and strategic efficiency. Moreover, Machine Learning helps interpret the collected data and contributes to better-informed business decisions. As a result, companies can respond to market demands more dynamically and build innovative, data-driven strategies.

img 2 – Data Lakes

Fundamentals of Machine Learning, key techniques and their application

This section looks at Machine Learning as an integral part of what is known as artificial intelligence. It enables information systems to learn and improve based on data. Three main types of learning are distinguished in the field: Supervised Learning, Unsupervised Learning and Reinforcement Learning. In Supervised Learning, each training example is assigned a label or score from which the machine learns, for example to recognise patterns or make forecasts. This type of learning is used, inter alia, in image classification and financial forecasting. Unsupervised Learning, in turn, works with unlabelled data and focuses on finding hidden patterns; it is useful in tasks such as grouping elements or detecting anomalies. Reinforcement Learning is based on a system of rewards and penalties, which helps machines optimise their actions under dynamically changing conditions, e.g. in games or automation. [3]
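The difference between supervised and unsupervised learning can be shown on the same small data set. In this sketch, a nearest-centroid classifier learns from labels, while k-means groups the same points without any labels. Both are simplified, dependency-free implementations written for illustration; the data, the labels and the deterministic farthest-point initialisation of k-means (real implementations such as k-means++ pick starting centres randomly) are assumptions.

```python
import math

Point = tuple[float, float]


def centroid(points: list[Point]) -> Point:
    """Mean of a non-empty list of 2-D points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def train_nearest_centroid(points: list[Point], labels: list[str]) -> dict[str, Point]:
    """Supervised: the labels are known, so learn the centroid of each label."""
    grouped: dict[str, list[Point]] = {}
    for point, label in zip(points, labels):
        grouped.setdefault(label, []).append(point)
    return {label: centroid(members) for label, members in grouped.items()}


def predict(centroids: dict[str, Point], p: Point) -> str:
    """Assign the label of the closest learned centroid."""
    return min(centroids, key=lambda label: math.dist(p, centroids[label]))


def k_means(points: list[Point], k: int, iterations: int = 20) -> list[int]:
    """Unsupervised: no labels, so group the points around k iteratively refined centres."""
    # Deterministic farthest-point start (a simplification of k-means++).
    centres = [points[0]]
    while len(centres) < k:
        centres.append(max(points, key=lambda p: min(math.dist(p, c) for c in centres)))
    assignment: list[int] = []
    for _ in range(iterations):
        # Assign every point to its nearest centre.
        assignment = [min(range(k), key=lambda c: math.dist(p, centres[c])) for p in points]
        # Move each centre to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centre if a cluster ends up empty
                centres[c] = centroid(members)
    return assignment


data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
labels = ["low", "low", "low", "high", "high", "high"]

model = train_nearest_centroid(data, labels)
print(predict(model, (4.5, 4.7)))  # high  (uses the labels we provided)
print(k_means(data, k=2))          # [0, 0, 0, 1, 1, 1]  (groups found without labels)
```

Reinforcement Learning does not fit this pattern, since it learns from rewards received while acting rather than from a fixed data set.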

Among the algorithms, neural networks excel at recognising patterns in complex data such as images or sound, and they form the basis of many advanced AI systems. Decision trees are used for classification and predictive analysis, for example in recommendation systems or sales forecasting. Each of these algorithms has its own applications and can be tailored to the specific needs of a task or problem, which makes Machine Learning a versatile tool in the world of data.
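A decision tree is built by repeatedly choosing the split that best separates the classes. The sketch below shows a single such step, a depth-1 tree (a "decision stump"), scored with Gini impurity, the criterion used by CART-style trees. The feature (weekly site visits) and the labels (whether a customer bought something) are hypothetical; a full tree would apply `best_split` recursively to each side and consider several features.

```python
from collections import Counter


def gini(labels: list[str]) -> float:
    """Gini impurity: 0.0 when all labels agree, larger when they are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(xs: list[float], ys: list[str]) -> tuple[float, float]:
    """Return (threshold, weighted impurity) of the best 'x <= threshold' split."""
    best_threshold, best_score = xs[0], float("inf")
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        # Weight each side's impurity by how many examples fall on it.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


def majority(labels: list[str]) -> str:
    """Most frequent label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(xs: list[float], ys: list[str]) -> tuple[float, str, str]:
    """Learn a depth-1 tree: one threshold plus the majority label on each side."""
    threshold, _ = best_split(xs, ys)
    left = [y for x, y in zip(xs, ys) if x <= threshold]
    right = [y for x, y in zip(xs, ys) if x > threshold] or left  # all points may fall left
    return threshold, majority(left), majority(right)


def classify(stump: tuple[float, str, str], x: float) -> str:
    threshold, left_label, right_label = stump
    return left_label if x <= threshold else right_label


# Hypothetical data: weekly site visits and whether the customer made a purchase.
visits = [1.0, 2.0, 3.0, 10.0, 12.0, 15.0]
bought = ["no", "no", "no", "yes", "yes", "yes"]

stump = fit_stump(visits, bought)
print(stump)                   # (3.0, 'no', 'yes')
print(classify(stump, 11.0))   # yes
```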

Examples of applications of Machine Learning

Applying Machine Learning to Data Lakes opens up a wide spectrum of possibilities, from anomaly detection, through personalised offers, to supply chain optimisation. In the financial sector, such algorithms analyse transaction patterns and identify anomalies or potential fraud in real time, which is crucial for preventing financial fraud. In retail and marketing, Machine Learning enables offers to be personalised by analysing customers' purchasing behaviour and preferences, which increases customer satisfaction and sales efficiency. [4] In industry, the algorithms help optimise supply chains by analysing data from various sources, such as weather forecasts or market trends, which helps predict demand and manage inventory and logistics. [5]
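As an illustration of flagging unusual transaction amounts, here is a minimal statistical sketch using the modified z-score. It relies on the median and the median absolute deviation (MAD), so a single extreme value cannot inflate the measured spread and hide itself. This is a simple baseline, not a Machine Learning model and not how any particular bank detects fraud: the transactions are made up, and the 0.6745 constant and 3.5 cut-off are commonly used rules of thumb. ML-based detectors typically learn such patterns from many features rather than from the amount alone.

```python
from statistics import median


def modified_z_scores(values: list[float]) -> list[float]:
    """Robust z-scores based on the median and the median absolute deviation (MAD)."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0] * len(values)  # no spread: nothing stands out by this measure
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indices of amounts whose modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(modified_z_scores(amounts)) if abs(z) > threshold]


# Hypothetical card transactions for one customer (in EUR).
transactions = [42.0, 38.5, 51.0, 45.2, 40.0, 47.3, 2150.0, 44.1]
print(flag_anomalies(transactions))  # [6]
```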

Machine Learning can also be used in early-stage design or product optimisation. Another interesting application of Machine Learning in Data Lakes is image analysis: the algorithms can process and analyse large collections of images. They are used in fields such as medical diagnostics, where they can help detect and classify lesions in radiological images, and in security systems, where analysing camera footage makes it possible to identify and track objects or people.

CONCLUSIONS

The article emphasises developments in the field of data analytics, highlighting how Machine Learning, Data Lakes and Data Engineering influence the way organisations process and use information. Introducing these technologies into a business improves existing processes and opens the way to new opportunities. The Data Engineering area modernises information processing, bringing greater precision, deeper insights and faster decision-making. This progress underlines the growing value of Data Engineering in modern business, where it is an important factor in adapting to dynamic market changes and creating data-driven strategies.

References

[1] https://bfirst.tech/data-engineering/

[2] https://www.netsuite.com/portal/resource/articles/data-warehouse/data-lake.shtml

[3] https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained

[4] https://www.tableau.com/learn/articles/machine-learning-examples