Artificial intelligence is one of the most exciting technological developments of recent years. It has the potential to fundamentally change the way we work and use modern technologies in many areas. We are talking about text and image generators, various types of algorithms, and autonomous cars. However, as the use of artificial intelligence becomes more widespread, it is also worth being aware of the problems it may bring. Given the increasing dependence of our systems on artificial intelligence, how we approach these dilemmas could have a crucial impact on the future shape of society. In this article, we will present these moral dilemmas. We will also discuss the problems associated with putting autonomous vehicles on the roads. Next, we will move on to the dangers of using artificial intelligence to spread disinformation. Finally, we will turn to concerns about the intersection of artificial intelligence and art.

The problem of data acquisition and bias

As a rule, human judgements are burdened by a subjective perspective; machines and algorithms are expected to be more objective. However, how machine learning algorithms work depends heavily on the data used to train them. Therefore, training data selected with any bias, even an unconscious one, can cause the algorithm to behave in undesirable ways. Please have a look at our earlier article (https://bfirst.tech/problemy-w-danych-historycznych-i-zakodowane-uprzedzenia/) for more information on this topic.
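
The effect can be illustrated with a minimal sketch. It is not a real machine learning pipeline: the data are hand-made and hypothetical, and the "model" (the names `train_rate_model` and `predict` are invented for this example) simply memorises how often each group was approved in the past. Even so, it shows how a system trained on biased decisions repeats them.

```python
from collections import defaultdict


def train_rate_model(records):
    """Learn the historical approval rate for each group.

    records: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def predict(model, group, threshold=0.5):
    """Approve a new case if the learned rate for its group meets the threshold."""
    return model[group] >= threshold


# Hypothetical history (an assumption for illustration): applicants from both
# groups are equally qualified, but group "B" was approved far less often
# by past human decision-makers.
history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 30 + [("B", False)] * 70
)

model = train_rate_model(history)
print(model)                # learned approval rate per group
print(predict(model, "A"))  # outcome for a new applicant from group A
print(predict(model, "B"))  # outcome for a new applicant from group B
```

Nothing in the code refers to the applicant's actual qualifications, so the only thing the model can learn is the pattern of past decisions, including their bias.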

Levels of automation in autonomous cars

In recent years, we have seen great progress in the development of autonomous cars. A lot of footage has appeared on the web showing prototypes of vehicles moving without the driver’s assistance or even presence. When discussing autonomous cars, it is worth pointing out that there are multiple levels of autonomy, and identifying which level one is referring to before the discussion begins. [1]

- Level 0 indicates vehicles that require full control by the driver, who performs all driving actions (steering, braking, acceleration, etc.). However, the vehicle can inform the driver of hazards on the road, using systems such as collision warning or lane departure warning.

- Level 1 includes vehicles that are already common on the road today. The driver is still in control of the vehicle, which is equipped with driving assistance systems such as cruise control or lane-keeping assist.

- Level 2, in addition to having the capabilities of the previous levels, is – under certain conditions – able to take partial control of the vehicle. It can influence the speed or direction of travel, under the constant supervision of the driver. The support functions include controlling the car in traffic jams or on the motorway.

- Level 3 of autonomy refers to vehicles that are not yet commercially available. Cars of this type are able to drive fully autonomously, under the supervision of the driver. The driver still has to be ready to take control of the vehicle if necessary.

- Level 4 means that the on-board computer performs all driving actions, but only on certain previously approved routes. In this situation, all persons in the vehicle act as passengers. However, it is still possible for a human to take control of the vehicle.

- Level 5 is the highest level of autonomy – the on-board computer is fully responsible for driving the vehicle under all conditions, without any need for human intervention. [2]
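
For readers who prefer a compact summary, the levels above can be sketched as a small data structure. This is only an illustration: the member names follow the commonly used SAE naming rather than this article, and the helper functions `driver_must_be_ready` and `limited_to_approved_routes` are hypothetical names that encode the descriptions given in the list.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Driving automation levels 0-5 (member names are not from the article)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_be_ready(level):
    """At levels 0-3 a human drives, supervises, or must be ready to take over."""
    return level <= AutomationLevel.CONDITIONAL_AUTOMATION


def limited_to_approved_routes(level):
    """Level 4 drives itself, but only on previously approved routes."""
    return level == AutomationLevel.HIGH_AUTOMATION


for level in AutomationLevel:
    print(level.value, level.name,
          driver_must_be_ready(level),
          limited_to_approved_routes(level))
```

Using an ordered enumeration makes the key boundary explicit: up to level 3 a person remains responsible for being ready to drive, while from level 4 upwards everyone in the vehicle can act as a passenger.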

Moral dilemmas in the face of autonomous vehicles

Vehicles with autonomy levels 0-2 are not particularly controversial. Technologies such as car control on the motorway are already available and make travelling easier. However, the potential introduction of vehicles with higher autonomy levels into general traffic raises some moral dilemmas. What happens when an autonomous car, under the care of a driver, is involved in an accident? Who is then responsible for causing it? The driver? The vehicle manufacturer? Or perhaps the car itself? There is no clear answer to this question.

Putting autonomous vehicles on the roads also introduces another problem – these vehicles may have security vulnerabilities. Such vulnerabilities could lead to data leaks or even to a hacker taking control of the vehicle. A car taken over in this way could be used to deliberately cause an accident or even carry out a terrorist attack. This raises the further problem of dividing responsibility between the manufacturer, the hacker and the user. [3]

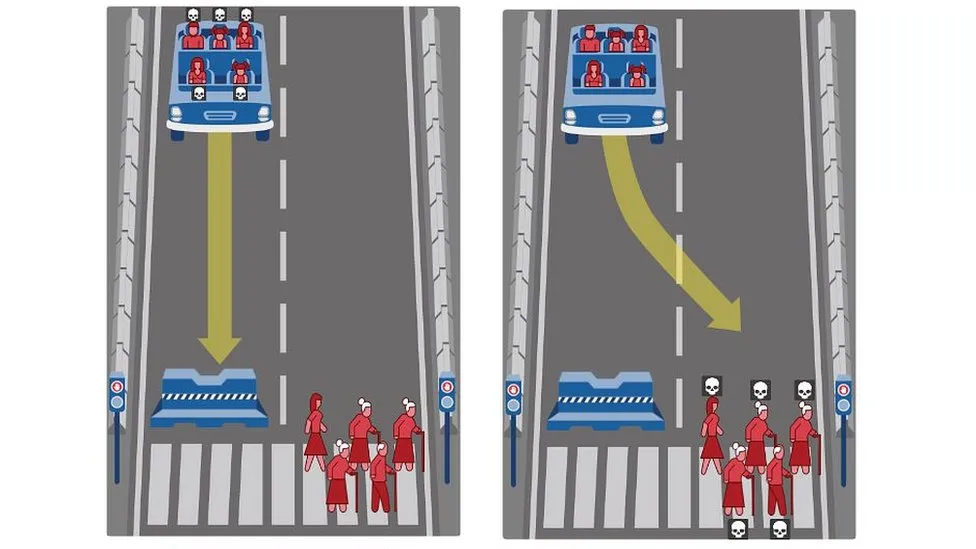

One of the most crucial issues related to autonomous vehicles is the ethical training of vehicles to make decisions. It is especially important in the event of danger to life and property. Who should make decisions in this regard – software developers, ethicists and philosophers, or perhaps country leaders? These decisions will affect who survives in the event of an unavoidable accident. Many of the situations that autonomous vehicles may encounter will require decisions that do not have one obvious answer (Figure 1). Should the vehicle prioritise saving pedestrians or passengers, the young or the elderly? How important is it for the vehicle not to interfere with the course of events? Should compliance with the law by the other party to the accident influence the decision? [4]

Fig. 1. An illustration of one of the situations that autonomous vehicles may encounter. Source: https://www.moralmachine.net/

Deepfake – what is it and why does it lead to misinformation?

People who use modern technology are bombarded with information from everywhere. The sheer volume and speed of information delivery mean that not all of it can be verified. This enables those fabricating fake information to reach a relatively large group of people, manipulate them into changing their minds about a certain subject, or even attempt to deceive them. Such practices have been around for some time, but they did not previously pose moral dilemmas on this scale. The advent of artificial intelligence dramatically simplifies the process of creating fake news and thus allows it to be created and disseminated more quickly.

Among disinformation techniques, artificial intelligence has the potential to be used particularly effectively to produce so-called deepfakes. Deepfake is a technique for manipulating images depicting people, relying on artificial intelligence. With the help of machine learning algorithms, modified images are superimposed on existing source material. The result is realistic videos and images depicting events that never took place. Until now, the technology mainly allowed for the processing of static images, and video editing was far more difficult to perform. The popularisation of artificial intelligence has removed these technical barriers, which has translated into a drastic increase in the frequency of this phenomenon. [5]

Video 1. Deepfake in the form of video footage using the image of President Obama.

Moral dilemmas associated with deepfakes

Deepfakes can be used for a variety of purposes. The technology can serve harmless projects, for example educational materials such as the video showing President Obama warning about the dangers of deepfakes (see Video 1). It also finds applications in the entertainment industry, such as the use of digital replicas of actors, although this application can itself raise moral dilemmas. One example is the use of a digital likeness of the late actor Peter Cushing to play the role of Grand Moff Tarkin in the film Rogue One: A Star Wars Story (see Figure 2).

Fig. 2. A digital replica of actor Peter Cushing as Grand Moff Tarkin. Source: https://screenrant.com/star-wars-rogue-one-tarkin-ilm-peter-cushing-video/

However, there are also many other uses of deepfakes that have the potential to pose a serious threat to the public. Such fabricated videos can be used to disgrace a person, for example by using their likeness in pornographic videos. Fake content can also be used in all sorts of scams, such as attempts to extort money. An example of such use is the case of a doctor whose image was used in an advertisement for cardiac pseudo-medications, which we cited in a previous article [6]. There is also a lot of controversy surrounding the use of deepfakes for the purpose of sowing disinformation, particularly in the area of politics. Used successfully, fake content can lead to diplomatic incidents, change the public’s reaction to certain political topics, discredit politicians and even influence election results. [7]

By its very nature, the spread of deepfakes is not something that can be easily prevented. Legal solutions are not fully effective due to the global scale of the problem and the nature of social network operation. Other proposed solutions to the problem include developing algorithms to detect fabricated content and educating the public about it.

AI-generated art

There are currently many AI-based text, image or video generators on the market. Midjourney, DALL-E, Stable Diffusion and many others, despite the different implementations and algorithms underlying them, have one thing in common – they require huge amounts of data which, due to their size, can be obtained only from the Internet – often without the consent of the authors of these works. As a result, a number of artists and companies have decided to file lawsuits against the companies developing artificial intelligence models. According to the plaintiffs, the latter are illegally using millions of copyrighted images retrieved from the Internet. To date, the most high-profile lawsuit is the one filed by Getty Images – an agency that offers images for business use – against Stability AI, creators of the open-source image generator Stable Diffusion. The agency accuses Stability AI of copying more than 12 million images from its database without prior consent or compensation (see Figure 3). The outcome of this and other legal cases related to AI image generation will shape the future applications and possibilities of this technology. [8]

Fig. 3. An illustration used in Getty Images’ lawsuit showing an original photograph and a similar image with a visible Getty Images watermark generated by Stable Diffusion. Source: https://www.theverge.com/2023/2/6/23587393/ai-art-copyright-lawsuit-getty-images-stable-diffusion

In addition to the legal problems of training generative models on the basis of copyrighted data, there are also moral dilemmas about artworks made with artificial intelligence. [9]

Will AI replace artists?

Many artists believe that artificial intelligence cannot replicate the emotional aspects of art that works by humans offer. When we watch films, listen to music and play games, we feel certain emotions that algorithms cannot give us. Algorithms are not creative in the same way that humans are. There are also concerns about the financial situation of many artists. These stem both from the lack of compensation for works included in the algorithms’ training sets and from the reduced number of commissions caused by the popularity and ease of use of the generators. [10]

On the other hand, some artists believe that artificial intelligence’s different way of “thinking” is an asset. It can create works that humans are unable to produce. This is one way in which generative models can become another tool in the hands of artists. With them they will be able to create art forms and genres that have not existed before, expanding human creativity.

The popularity and possibilities of generative artificial intelligence continue to grow. Consequently, there are numerous debates about the legal and ethical issues surrounding this technology. It has the potential to drastically change the way we interact with art.

Conclusions

Used appropriately, artificial intelligence has the potential to become an important and widely used tool in the hands of humanity. It can increase productivity, facilitate a wide range of activities and expand our creative capabilities. However, the technology carries certain risks that should not be underestimated. Reckless use of autonomous vehicles, AI art or deepfakes can lead to many problems. These can include financial or reputational losses, and even threats to health and life. Further development of deepfake detection technologies, new methods of recognising disinformation and fake video footage, new legal solutions, and educating the public about the dangers of AI will all be important in reducing the occurrence of these problems.

References

[1] https://www.nhtsa.gov/vehicle-safety/automated-vehicles-safety

[2] https://blog.galonoleje.pl/pojazdy-autonomiczne-samochody-bez-kierowcow-juz-sa-na-ulicach

[4] https://www.bbc.com/news/technology-45991093

[5] https://studiadesecuritate.uken.krakow.pl/wp-content/uploads/sites/43/2019/10/2-1.pdf

[9] https://www.benchmark.pl/aktualnosci/dzielo-sztucznej-inteligencji-docenione.html